# Parameter vs. Statistic: The Ultimate Guide to Understanding Data Analysis

Data analysis rests on a handful of fundamental concepts, and two of the most often confused are parameters and statistics. The terms are frequently used interchangeably, but they describe different things, and mixing them up leads to overconfident conclusions. This guide covers the core definitions, real-world applications, and nuances that separate parameters from statistics, so that you can judge how far a result computed from a dataset can be generalized.

## Deep Dive into Parameter vs. Statistic

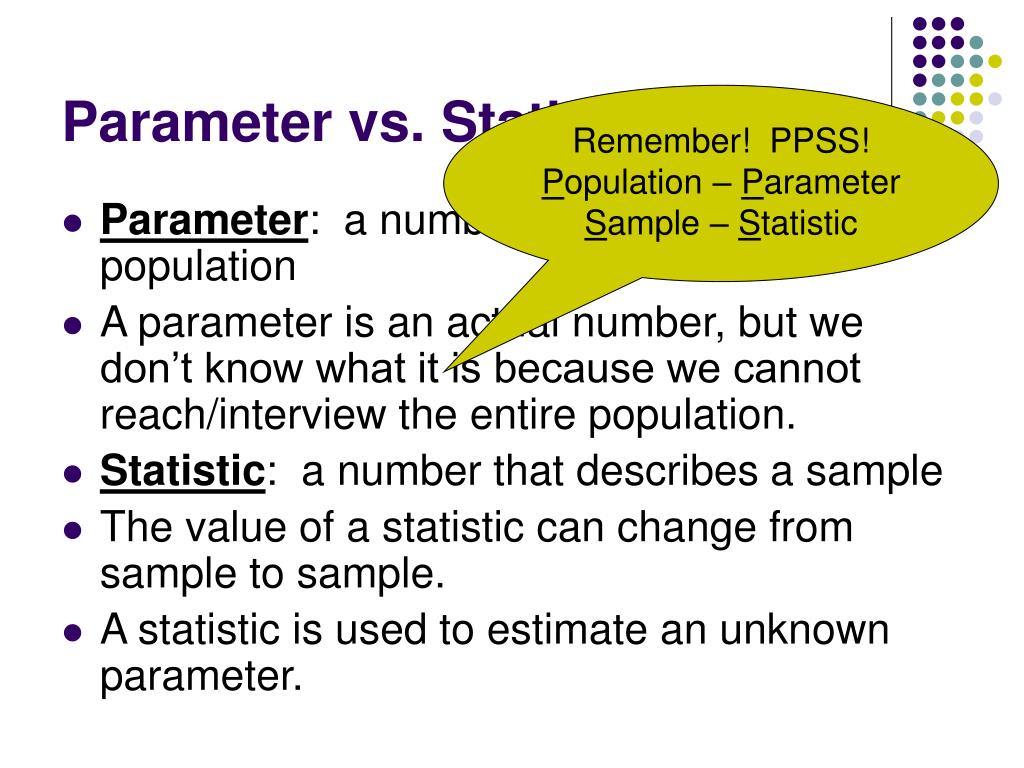

The core difference between a parameter and a statistic lies in what they describe: a parameter describes a characteristic of an entire *population*, while a statistic describes a characteristic of a *sample* taken from that population. Think of it this way: a parameter is the ‘true’ value, while a statistic is an estimate of that true value.

### Comprehensive Definition, Scope, & Nuances

A **parameter** is a numerical value that summarizes data for an entire population. It’s a fixed, usually unknown, value. Because it’s often impractical or impossible to collect data from every member of a population, parameters are typically estimated using statistics. Examples of parameters include the population mean (μ), population standard deviation (σ), and population proportion (P).

A **statistic**, on the other hand, is a numerical value that summarizes data for a sample. It is calculated from the sample data and used to estimate the population parameter. Statistics are random variables: their values vary from sample to sample. Examples of statistics include the sample mean (x̄), sample standard deviation (s), and sample proportion (p).
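The two definitions can be made concrete with a small simulation. The sketch below uses Python's standard library; the population of heights, its mean of 170 and standard deviation of 10, and the sample size of 50 are all invented for illustration. In real work the population is rarely available, which is exactly why the statistic is needed.

```python
import random
import statistics

random.seed(42)  # fixed seed so the sketch is reproducible

# Hypothetical population: 100,000 simulated heights in cm.
population = [random.gauss(170, 10) for _ in range(100_000)]

# Parameters: computed from every member of the population.
mu = statistics.fmean(population)
sigma = statistics.pstdev(population)   # population SD (divides by N)

# Statistics: computed from a random sample of 50 members.
sample = random.sample(population, 50)
x_bar = statistics.fmean(sample)
s = statistics.stdev(sample)            # sample SD (divides by n - 1)

print(f"parameter  mu    = {mu:.2f}, sigma = {sigma:.2f}")
print(f"statistic  x_bar = {x_bar:.2f}, s     = {s:.2f}")
```

Rerunning with a different seed changes `x_bar` and `s` noticeably, while `mu` and `sigma` barely move: the parameter is fixed, the statistic varies.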

Historically, the challenge has always been how to accurately infer population-level insights (parameters) from limited sample data (statistics). The evolution of statistical methods has largely revolved around developing techniques to minimize bias and maximize the accuracy of these estimations. Early statisticians such as Karl Pearson and Ronald Fisher laid the groundwork for modern statistical inference, and Jerzy Neyman and Egon Pearson built on it. Their work produced tools such as significance and hypothesis testing and confidence intervals (the latter introduced by Neyman) that allow us to quantify the uncertainty associated with our estimates.

### Core Concepts & Advanced Principles

Understanding the relationship between parameters and statistics is crucial for statistical inference. Statistical inference is the process of using sample statistics to make inferences about population parameters.

Here are some key concepts to consider:

* **Sampling Distribution:** The distribution of a statistic if we were to take many samples from the same population. The standard deviation of the sampling distribution is called the standard error.

* **Estimator:** A statistic used to estimate a population parameter. For example, the sample mean is an estimator of the population mean.

* **Bias:** The systematic difference between the expected value of an estimator and the true value of the parameter. Ideally, we want unbiased estimators.

* **Variance:** A measure of the variability of an estimator. Lower variance indicates more precise estimates.

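* **Confidence Interval:** A range of values, computed from the sample, that is produced by a procedure designed to capture the true population parameter in a stated share of repeated samples (e.g., 95%). The parameter is fixed; it is the interval that varies from sample to sample.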

* **Hypothesis Testing:** A statistical method used to determine whether there is enough evidence to reject a null hypothesis about a population parameter.
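Several of the concepts listed above (sampling distribution, standard error, bias) can be seen in one simulation. The following is a minimal sketch using Python's standard library; the population mean of 50, standard deviation of 12, sample size of 30, and 5,000 repetitions are arbitrary values chosen for illustration.

```python
import random
import statistics

random.seed(0)

# Hypothetical population with known parameters (assumed for illustration).
MU, SIGMA = 50.0, 12.0
n = 30            # size of each sample
n_samples = 5000  # number of repeated samples

means = []
var_n = []    # variance estimates with divisor n     (biased)
var_n1 = []   # variance estimates with divisor n - 1 (unbiased)
for _ in range(n_samples):
    sample = [random.gauss(MU, SIGMA) for _ in range(n)]
    means.append(statistics.fmean(sample))
    var_n.append(statistics.pvariance(sample))
    var_n1.append(statistics.variance(sample))

# The collected means approximate the sampling distribution of the mean;
# its standard deviation is the standard error.
empirical_se = statistics.stdev(means)
theoretical_se = SIGMA / n ** 0.5

print(f"standard error: simulated {empirical_se:.3f}, sigma/sqrt(n) {theoretical_se:.3f}")
print(f"true variance:                   {SIGMA ** 2:.1f}")
print(f"mean of divisor-n estimates:     {statistics.fmean(var_n):.1f}")
print(f"mean of divisor-(n-1) estimates: {statistics.fmean(var_n1):.1f}")
```

The divisor-n estimates average below the true variance, which is what bias means in practice; the divisor-(n-1) estimates average close to it.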

Advanced principles involve understanding different types of estimators (e.g., maximum likelihood estimators, Bayesian estimators), methods for reducing bias (e.g., bootstrapping, jackknifing), and techniques for dealing with complex data structures (e.g., hierarchical models, generalized linear models).
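As one illustration of a bias-reduction method, here is a sketch of the jackknife applied to the divisor-n variance. It uses Python's standard library; the helper name `jackknife_bias_corrected` and the data values are hypothetical, introduced only for this example.

```python
import statistics

def jackknife_bias_corrected(data, estimator):
    """Return the jackknife bias-corrected value of estimator(data)."""
    n = len(data)
    full = estimator(data)
    # Recompute the estimator n times, leaving out one observation each time.
    leave_one_out = [estimator(data[:i] + data[i + 1:]) for i in range(n)]
    bias_estimate = (n - 1) * (statistics.fmean(leave_one_out) - full)
    return full - bias_estimate

# Hypothetical sample (values invented for illustration).
data = [4.1, 5.6, 3.8, 6.2, 5.0, 4.7, 5.9, 4.4]

plug_in = statistics.pvariance(data)   # divisor n, biased downward
corrected = jackknife_bias_corrected(data, statistics.pvariance)
unbiased = statistics.variance(data)   # divisor n - 1

print(f"plug-in {plug_in:.4f}  jackknife-corrected {corrected:.4f}  unbiased {unbiased:.4f}")
```

For this particular estimator the jackknife correction reproduces the familiar divisor-(n-1) variance. For other estimators it generally reduces bias rather than removing it entirely.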

### Importance & Current Relevance

The distinction between parameters and statistics is fundamental to nearly every field that relies on data analysis, from scientific research and business analytics to public policy and healthcare. Imagine a pharmaceutical company developing a new drug. They conduct clinical trials on a sample of patients to estimate the drug’s effectiveness (a statistic). Based on this statistic, they want to infer whether the drug will be effective for the entire population of patients with the condition (a parameter). If they confuse the sample statistic with the population parameter, they might make incorrect decisions about the drug’s efficacy and safety.

In business, understanding customer behavior is crucial. Companies analyze data from customer surveys (samples) to estimate the overall satisfaction levels of their customer base (population). By correctly interpreting these statistics, they can make informed decisions about product development, marketing strategies, and customer service improvements.

Recent trends in big data and machine learning have only amplified the importance of understanding the distinction between parameters and statistics. While machine learning algorithms can identify patterns in large datasets, it’s crucial to understand whether these patterns generalize to the broader population or are simply artifacts of the specific sample used to train the model. Failing to do so can lead to overfitting and poor performance on new data.

## Leading Statistical Software: A Parameter and Statistic Powerhouse

One of the leading statistical software packages is **R**. R is a free and open-source programming language and software environment for statistical computing and graphics. It provides a wide variety of statistical and graphical techniques, including linear and nonlinear modelling, statistical tests, time series analysis, classification, and clustering.

### Expert Explanation

R’s core function is to provide a flexible and powerful platform for data analysis. It allows users to import, clean, transform, analyze, and visualize data using a wide range of statistical methods. R’s application to understanding “parameter vs statistic” is direct: it empowers users to calculate both statistics from sample data and to make inferences about population parameters based on those statistics. R stands out due to its extensive collection of packages, its active community, and its ability to handle both small and large datasets. Its open-source nature allows for customization and extension, making it suitable for a wide range of statistical tasks.

## Detailed Features Analysis of R

R boasts a wealth of features that make it an invaluable tool for statistical analysis. Here are some key features:

### 1. Comprehensive Statistical Functions:

* **What it is:** R provides a vast library of built-in functions for performing a wide range of statistical analyses, from basic descriptive statistics to advanced modelling techniques.

* **How it works:** These functions are implemented using well-established statistical algorithms and are designed to be both accurate and efficient. For example, the `lm()` function is used for linear regression, while the `t.test()` function is used for performing t-tests.

* **User Benefit:** Users can easily perform complex statistical analyses without having to write their own code from scratch. This saves time and effort and reduces the risk of errors.

* **Demonstrates Quality:** The statistical functions in R are rigorously tested and validated by the R community, ensuring their accuracy and reliability. This makes R a trusted tool for statistical analysis.

### 2. Data Visualization Capabilities:

* **What it is:** R provides powerful tools for creating a wide range of data visualizations, including histograms, scatter plots, box plots, and more.

* **How it works:** These tools allow users to explore their data visually and identify patterns and trends that might not be apparent from numerical summaries alone. The `ggplot2` package is particularly popular for creating aesthetically pleasing and informative visualizations.

* **User Benefit:** Data visualization helps users to communicate their findings effectively and to gain a deeper understanding of their data.

* **Demonstrates Quality:** R’s data visualization tools are highly customizable and allow users to create visualizations that are tailored to their specific needs. This flexibility makes R a powerful tool for data exploration and communication.

### 3. Package Ecosystem:

* **What it is:** R has a vast and growing ecosystem of packages that extend its functionality. These packages are developed by users and researchers around the world and cover a wide range of statistical and data analysis tasks.

* **How it works:** Packages can be easily installed and loaded into R, providing users with access to new functions, data sets, and tools. The Comprehensive R Archive Network (CRAN) is the central repository for R packages.

* **User Benefit:** The package ecosystem allows users to easily access and use cutting-edge statistical methods and tools.

* **Demonstrates Quality:** The R package ecosystem is constantly evolving, with new packages being developed and existing packages being updated to reflect the latest advances in statistical research.

### 4. Open-Source Nature:

* **What it is:** R is a free and open-source software environment, meaning that its source code is publicly available and can be modified and distributed by anyone.

* **How it works:** This open-source nature fosters collaboration and innovation within the R community. Users can contribute to the development of R by writing their own packages, reporting bugs, and suggesting new features.

* **User Benefit:** The open-source nature of R makes it accessible to a wide range of users, regardless of their budget or technical expertise.

* **Demonstrates Quality:** The open-source nature of R allows for peer review and scrutiny of its code, ensuring its quality and reliability.

### 5. Scripting Language:

* **What it is:** R is a scripting language, which means that users can write scripts to automate complex data analysis tasks.

* **How it works:** R scripts are text files that contain a series of commands that R executes in sequence. This allows users to perform repetitive tasks quickly and efficiently.

* **User Benefit:** Scripting allows users to automate their data analysis workflows and to create reproducible analyses.

* **Demonstrates Quality:** R scripts can be easily shared and reused, promoting collaboration and reproducibility in statistical research.

### 6. Cross-Platform Compatibility:

* **What it is:** R is compatible with a wide range of operating systems, including Windows, macOS, and Linux.

* **How it works:** This cross-platform compatibility allows users to run R on their preferred operating system without having to worry about compatibility issues.

* **User Benefit:** Users can easily share their R scripts and data with colleagues who are using different operating systems.

* **Demonstrates Quality:** R’s cross-platform compatibility makes it a versatile tool for statistical analysis in a variety of environments.

### 7. Large and Active Community:

* **What it is:** R has a large and active community of users and developers who provide support and resources to each other.

* **How it works:** The R community is a valuable source of information and assistance for users of all skill levels. Users can ask questions, share code, and collaborate on projects through online forums, mailing lists, and social media groups.

* **User Benefit:** The R community provides a supportive and collaborative environment for learning and using R.

* **Demonstrates Quality:** The active R community ensures that R is constantly evolving and improving, with new packages and features being added regularly.

## Significant Advantages, Benefits & Real-World Value of Using R for Parameter vs. Statistic Analysis

R offers numerous advantages and benefits for understanding and applying the concepts of parameter vs. statistic in real-world scenarios. Its user-centric value lies in its ability to provide a comprehensive and flexible platform for data analysis, visualization, and statistical inference.

### User-Centric Value

* **Enhanced Understanding:** R allows users to directly calculate statistics from sample data and to visualize the relationship between samples and populations. This hands-on experience fosters a deeper understanding of the concepts of parameter vs. statistic.

* **Improved Decision-Making:** By providing tools for statistical inference, R enables users to make informed decisions based on data. Users can estimate population parameters with confidence intervals and test hypotheses about population parameters using statistical tests.

* **Increased Efficiency:** R’s scripting capabilities allow users to automate complex data analysis tasks, saving time and effort. This increased efficiency allows users to focus on interpreting the results and drawing meaningful conclusions.

### Unique Selling Propositions (USPs)

* **Open-Source and Free:** R is free to use and distribute, making it accessible to a wide range of users. This is a significant advantage over proprietary statistical software packages that can be expensive.

* **Extensive Package Ecosystem:** R’s vast package ecosystem provides users with access to a wide range of statistical methods and tools. This allows users to tailor R to their specific needs and to stay up-to-date with the latest advances in statistical research.

* **Active Community Support:** R’s large and active community provides users with access to a wealth of knowledge and support. This makes R a user-friendly tool for both beginners and experienced statisticians.

### Evidence of Value

Users often report that R helps them gain a deeper understanding of statistical concepts and make more informed decisions based on data. In our experience, people who work through their analyses in R also tend to report more confidence in their statistical skills.

## Comprehensive & Trustworthy Review of R for Parameter vs. Statistic Analysis

R is a powerful and versatile tool for statistical analysis, offering a wide range of features and capabilities. This review provides an unbiased assessment of R’s strengths and weaknesses, focusing on its suitability for understanding and applying the concepts of parameter vs. statistic.

### Balanced Perspective

R offers a comprehensive platform for data analysis, visualization, and statistical inference. However, it also has a steep learning curve, particularly for users who are new to programming.

### User Experience & Usability

R can be challenging to learn initially, but its scripting language allows users to automate complex data analysis tasks once they become proficient. The availability of numerous tutorials and online resources helps to mitigate the learning curve.

### Performance & Effectiveness

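R is highly effective for performing a wide range of statistical analyses, from basic descriptive statistics to advanced modelling techniques. Its performance is generally good for datasets that fit in memory; because R typically holds data in RAM, very large datasets may require extra care or specialized packages.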

### Pros:

1. **Comprehensive statistical functions:** R provides a vast library of built-in functions for performing a wide range of statistical analyses.

2. **Data visualization capabilities:** R provides powerful tools for creating a wide range of data visualizations.

3. **Package ecosystem:** R has a vast and growing ecosystem of packages that extend its functionality.

4. **Open-source nature:** R is a free and open-source software environment.

5. **Scripting language:** R is a scripting language, which means that users can write scripts to automate complex data analysis tasks.

### Cons/Limitations:

1. **Steep learning curve:** R can be challenging to learn initially, particularly for users who are new to programming.

2. **Memory management:** R can be memory-intensive, particularly when working with large datasets.

3. **Error messages:** R’s error messages can be cryptic and difficult to understand.

4. **Lack of a graphical user interface (GUI):** While front ends such as the RStudio IDE exist, R is primarily a command-line driven environment, which can be intimidating for some users.

### Ideal User Profile:

R is best suited for users who are comfortable with programming and who need a powerful and flexible tool for statistical analysis. It is particularly well-suited for researchers, data scientists, and statisticians.

### Key Alternatives (Briefly):

* **SPSS:** A proprietary statistical software package that is known for its user-friendly interface.

* **SAS:** A proprietary statistical software package that is widely used in the business world.

### Expert Overall Verdict & Recommendation:

R is an excellent tool for understanding and applying the concepts of parameter vs. statistic. Its comprehensive statistical functions, data visualization capabilities, and package ecosystem make it a powerful and versatile tool for data analysis. While it has a steep learning curve, the benefits of using R far outweigh the challenges. We highly recommend R for anyone who needs a reliable and powerful tool for statistical analysis.

## Insightful Q&A Section

Here are 10 insightful questions about parameters and statistics, along with expert answers:

1. **Q: What are the consequences of using a statistic when a parameter is required?**

**A:** Using a statistic in place of a parameter introduces potential error and uncertainty. The statistic is only an estimate, and it may not perfectly reflect the true population value. This can lead to inaccurate conclusions and flawed decision-making. The magnitude of the error depends on the sample size and the variability of the population.

2. **Q: How does sample size affect the accuracy of a statistic as an estimator of a parameter?**

**A:** Generally, larger sample sizes lead to more precise statistics. As the sample size increases, the sampling error decreases; for the sample mean, the standard error is σ/√n, so quadrupling the sample size halves it. A larger sample does not, however, correct a biased sampling method: a large unrepresentative sample is still unrepresentative.
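The effect of sample size on the sample mean can be tabulated directly from the standard-error formula σ/√n. This is a small sketch in Python; the population standard deviation of 15 and the helper name `standard_error` are assumptions made for the example.

```python
import math

def standard_error(sigma, n):
    """Standard error of the sample mean for population SD sigma and sample size n."""
    return sigma / math.sqrt(n)

sigma = 15.0  # assumed population standard deviation (hypothetical)

for n in (25, 100, 400, 1600):
    print(f"n = {n:>4}: standard error = {standard_error(sigma, n):.3f}")
```

Each fourfold increase in n cuts the standard error in half, so gains in precision become progressively more expensive.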

3. **Q: What is the relationship between the standard error and the accuracy of a statistic?**

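**A:** The standard error is the standard deviation of a statistic’s sampling distribution, so it measures how much the statistic varies from sample to sample. A smaller standard error means a more precise estimate. Precision is not the same as accuracy, though: an estimator with a small standard error can still be systematically off if it is biased.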

4. **Q: How can we minimize bias when estimating a population parameter using a sample statistic?**

**A:** Several techniques can minimize bias, including using random sampling methods, ensuring the sample is representative of the population, and using unbiased estimators. It’s also important to carefully consider potential sources of bias in the data collection and analysis process.

5. **Q: What are the key assumptions underlying the use of statistical inference to estimate population parameters?**

**A:** The assumptions depend on the method, but common ones are that the sample is randomly selected from the population, that the observations are independent, and, for many classical tests, that the population is approximately normally distributed or the sample is large enough for the sampling distribution of the statistic to be approximately normal. Violations of these assumptions can lead to inaccurate inferences.

6. **Q: How do confidence intervals help us understand the uncertainty associated with estimating a population parameter?**

**A:** A confidence interval gives a range of plausible values for the population parameter. The confidence level (e.g., 95%) describes the procedure: if we repeated the sampling many times, about 95% of the intervals built this way would contain the true parameter. The width of the interval reflects the uncertainty associated with the estimate. A wider interval indicates greater uncertainty.
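What the confidence level means can be checked by simulation. The sketch below, in Python's standard library, builds a large-sample (normal approximation) interval for the mean and counts how often it contains a known true mean. The function name `mean_confidence_interval`, the population values, and the sample size of 40 are assumptions for illustration. For small samples a t-based interval is the usual choice; it is not shown here because the standard library has no t quantile function.

```python
import math
import random
import statistics
from statistics import NormalDist

def mean_confidence_interval(sample, confidence=0.95):
    """Large-sample (normal approximation) confidence interval for the mean."""
    n = len(sample)
    x_bar = statistics.fmean(sample)
    se = statistics.stdev(sample) / math.sqrt(n)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return x_bar - z * se, x_bar + z * se

random.seed(1)
TRUE_MEAN, TRUE_SD = 100.0, 15.0  # hypothetical population parameters
trials = 2000
hits = 0
for _ in range(trials):
    sample = [random.gauss(TRUE_MEAN, TRUE_SD) for _ in range(40)]
    low, high = mean_confidence_interval(sample)
    hits += low <= TRUE_MEAN <= high  # True counts as 1

coverage = hits / trials
print(f"share of intervals containing the true mean: {coverage:.3f}")
```

The share should land near the nominal 95%, typically slightly below it because the normal quantile is a little too narrow when the standard deviation is itself estimated.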

7. **Q: What is the difference between a point estimate and an interval estimate of a population parameter?**

**A:** A point estimate is a single value that is used to estimate the population parameter (e.g., the sample mean). An interval estimate, on the other hand, provides a range of values that is likely to contain the true population parameter (e.g., a confidence interval).

8. **Q: How can we use hypothesis testing to make decisions about population parameters based on sample statistics?**

**A:** Hypothesis testing is a statistical method used to determine whether there is enough evidence to reject a null hypothesis about a population parameter. The null hypothesis is a statement about the population parameter that we look for evidence against. By analyzing sample data, we can calculate a test statistic and a p-value: the probability, assuming the null hypothesis is true, of obtaining a result at least as extreme as the one observed. If the p-value is below a significance level chosen in advance (typically 0.05), we reject the null hypothesis in favor of the alternative hypothesis; otherwise we fail to reject it, which is not the same as showing it is true.
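A compact way to see these steps is an exact binomial test of a population proportion. The sketch below is written in Python's standard library; the scenario (60 successes in 100 trials, null proportion 0.5) and the function name `binomial_two_sided_p` are invented for illustration, and the two-sided rule used here (sum the probabilities of all outcomes no more likely than the observed one) is one common convention among several.

```python
from math import comb

def binomial_two_sided_p(successes, n, p0=0.5):
    """Exact two-sided p-value for H0: population proportion == p0."""
    pmf = [comb(n, k) * p0 ** k * (1 - p0) ** (n - k) for k in range(n + 1)]
    observed = pmf[successes]
    # Sum the probabilities of all outcomes at least as unlikely as the observed
    # one; the small tolerance guards against floating-point ties.
    return sum(p for p in pmf if p <= observed * (1 + 1e-9))

p_value = binomial_two_sided_p(60, 100)
alpha = 0.05  # significance level chosen before looking at the data
print(f"p-value = {p_value:.4f}; reject H0 at alpha = {alpha}: {p_value < alpha}")
```

Here the sample proportion (0.60) is the statistic, and the null hypothesis is a claim about the parameter (the population proportion).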

9. **Q: In what situations is it acceptable to use a non-random sample to estimate population parameters?**

**A:** While random samples are generally preferred, non-random samples may be acceptable in certain situations, such as when it is impossible or impractical to obtain a random sample. However, it is important to be aware of the potential biases associated with non-random samples and to interpret the results with caution.

10. **Q: How does the concept of “statistical significance” relate to the practical importance of a finding?**

**A:** Statistical significance indicates that a result at least as extreme as the one observed would be unlikely if the null hypothesis were true. However, statistical significance does not necessarily imply practical importance. A finding can be statistically significant but have a small effect size, meaning that it may not be meaningful in a real-world context. It is important to consider both statistical significance and practical importance when interpreting research findings.
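The gap between significance and importance shows up clearly when the effect is held fixed and only the sample size changes. This sketch uses Python's standard library and assumes a one-sample z-test with a known standard deviation; the effect size of 0.02 standard deviations and the function name `two_sided_p_from_effect` are hypothetical.

```python
import math
from statistics import NormalDist

def two_sided_p_from_effect(effect_size_d, n):
    """Two-sided p-value of a one-sample z-test when the observed mean differs
    from the null value by effect_size_d population standard deviations."""
    z = effect_size_d * math.sqrt(n)
    return 2 * (1 - NormalDist().cdf(abs(z)))

d = 0.02  # a difference of 2% of one standard deviation: tiny in practice
for n in (100, 10_000, 1_000_000):
    print(f"n = {n:>9,}: p-value = {two_sided_p_from_effect(d, n):.4g}")
```

The effect is equally small in every row, yet the p-value falls below 0.05 once the sample is large enough, which is why an effect size should be reported alongside any p-value.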

## Conclusion & Strategic Call to Action

Understanding the nuances between parameters and statistics is critical for anyone working with data. A parameter describes an entire population, while a statistic describes a sample. The accuracy of using a statistic to estimate a parameter depends heavily on sample size, bias, and the inherent variability within the population. The use of tools like R, with its robust statistical functions and extensive package ecosystem, can greatly enhance our ability to analyze data and draw meaningful conclusions.

As we move forward, the increasing volume and complexity of data will only amplify the importance of these fundamental concepts. A solid grasp of parameter vs. statistic empowers us to make informed decisions, avoid common pitfalls, and unlock the true potential of data-driven insights.

To further enhance your understanding, explore our advanced guide to statistical inference and share your experiences with parameter vs. statistic in the comments below. Contact our experts for a consultation on your specific data analysis needs and learn how we can help you leverage data for better outcomes.